Finding Out (Approximately) How Long A Calculation Contributes To The Duration Of An MDX Query

Reposted from Chris Webb's blog with the author's permission.

In my last two blog posts (see here and here) I showed how to use the Calculation Evaluation and Calculation Evaluation Detailed Information trace events to work out which MDX calculations are evaluated when a query runs in Analysis Services Multidimensional. That’s very useful, but wouldn’t it be great if you could work out how long any single calculation contributes to the overall duration of a query? If you could, it would make performance tuning MDX calculations much easier.

While you can’t get an exact amount of time taken for each calculation, the good news is that it is possible to get a duration rounded to the next second if your calculation is evaluated in bulk mode.

Take a look at the following query:

WITH

MEMBER MEASURES.DAYRANK AS

RANK(

[Date].[Date].CURRENTMEMBER,

[Date].[Date].[Date].MEMBERS)-1

MEMBER MEASURES.HADSALE AS

IIF(

[Measures].[Internet Sales Amount]=0,

NULL,

MEASURES.DAYRANK)

MEMBER MEASURES.LASTSALERANK AS

MAX(

NULL:[Date].[Date].CURRENTMEMBER,

MEASURES.HADSALE)

MEMBER MEASURES.LASTSALE AS

([Measures].[Internet Sales Amount],

[Date].[Date].[Date].MEMBERS.ITEM(MEASURES.LASTSALERANK))

MEMBER MEASURES.SIMPLECALC AS

[Measures].[Internet Sales Amount] * 2

SELECT

HEAD([Customer].[Customer].[Customer].MEMBERS, 200)

*

{MEASURES.SIMPLECALC, MEASURES.LASTSALE}

ON 0,

[Date].[Date].[Date].MEMBERS

ON 1

FROM

[Adventure Works]

This query contains five calculated measures: the first four in the WITH clause, DAYRANK, HADSALE, LASTSALERANK and LASTSALE, are based on my approach for finding the last ever non-empty value for a measure across time; the final measure, SIMPLECALC, is as the name suggests a very simple calculation. On my laptop this query takes around 13 seconds to run on my laptop, on a warm Storage Engine cache. Why does it take so long? It’s clearly the calculations that are the problem, but which one(s)?

Luckily all of the calculations in this query are evaluated in bulk mode so, as I discussed in my last two posts, there is an event raised with:

Event Class = Calculation Evaluation Detailed Information

Event Subclass = 107 – RunEvalNode Finished Calculating Item

…for each of the calculations when they are evaluated. Unfortunately the Duration column for this event always shows 0, but there is a way to see approximately how long the calculation took by comparing the Start Time and Current Time columns in the trace.

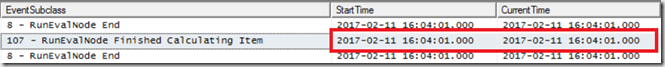

The 107 – RunEvalNode event for the measure SIMPLECALC shows the same time for the Start Time and Current Time columns:

This indicates that the SIMPLECALC calculation is evaluated in under a second.

However, sequence of 107 – RunEvalNode events for the LASTSALE calculation shows something different:

There’s a gap of 7 seconds between the StartTime and the CurrentTime, and this indicates that the calculation took 7 seconds to evaluate. It’s a bit frustrating that there isn’t a way to get a more accurate duration here, but it’s still very clear which calculation is taking all the time. Even though the time for calculating LASTSALE includes the time taken for calculating LASTSALERANK, HADSALE and DAYRANK (all of which need to be calculated in order to calculation LASTSALE), the equivalent rows in the trace for these other calculations show they took under a second each. It’s only the logic inside LASTSALE itself that is slow – so that’s where any tuning needs to take place. Indeed, modifying the query to return LASTSALERANK instead of LASTSALE makes the query faster by around 6 seconds, supporting this conclusion.

If you’re curious about what the other 6 seconds of the query execution time is taken up by, it seems like it’s serialisation of the results – something I blogged about here. The query returns a cellset with 400*1190=476000 cells in, and SSAS doesn’t cope well with queries that return a large amount of data.

|

Chris has been working with Microsoft BI tools since he started using beta 3 of OLAP Services back in the late 90s. Since then he has worked with Analysis Services in a number of roles (including three years spent with Microsoft Consulting Services) and he is now an independent consultant specialising in complex MDX, Analysis Services cube design and Analysis Services query performance problems. His company website can be found at http://www.crossjoin.co.uk and his blog can be found at http://cwebbbi.wordpress.com/ . |

Tags: mdx, performance