Solving the ‘Events in Progress’ problem in MDX, Part 2–Role Playing Measure Groups

Reposted from Chris Webb's blog with the author's permission.

In my last post I described a simple solution to the ‘events in progress’ problem in SSAS and MDX, which nonetheless had one drawback: it involved loading all the data from the same fact table into two different measure groups in the same cube, which of course potentially doubles both the time taken to process the cube and its size on disk. I used the same technique in my recent post on improving the performance of currency conversion, and indeed it’s a technique that I have used successfully in the past with several customers; but it seems rather inelegant, so is there a way we can avoid doing it and have only one measure group? It doesn’t seem to be possible at first glance, and I actually opened a Connect item a while ago asking for this functionality (‘role playing measure groups’) to be implemented. I was having a good week last week, though, and at the same time as solving the ‘events in progress’ problem I also came up with a solution to this one…

Here’s what I did, using the ‘events in progress’ problem as an illustration:

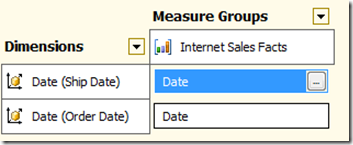

First of all, I created and processed a simple cube called ‘EventsInProgressSource’ with a single measure group, a single Count measure, and two dimensions, Order Date and Ship Date, with regular relationships joining on the OrderDateKey and ShipDateKey columns of the fact table:

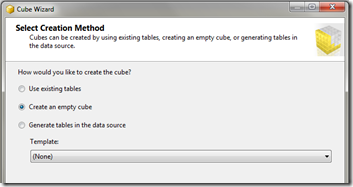

I then created a second cube, ‘EventsInProgress2’, using the ‘Create Empty Cube’ option in the Cube Wizard.

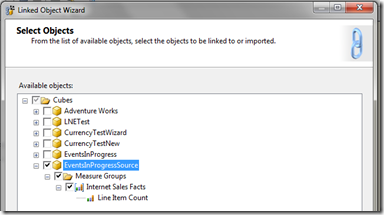

I then started the New Linked Object wizard and copied everything from the ‘EventsInProgressSource’ cube to the ‘EventsInProgress2’ cube:

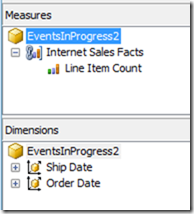

This resulted in a cube with one linked measure group and two linked dimensions:

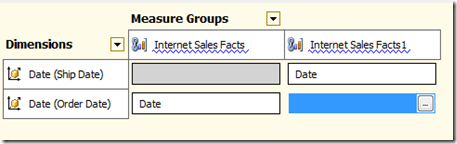

Now here’s the fun bit. I then deployed and processed the cube, closed BIDS and went to SQL Server Management Studio. There I scripted the Internet Sales Facts linked measure group in ‘EventsInProgress2’ to an XMLA Create statement, manually updated the XMLA by adding a 1 to the end of the name and ID of both the measure group itself and the Line Item Count measure, and then executed the script against the ‘EventsInProgress2’ cube. This created a second, identical linked measure group – something that again BIDS doesn’t let you do. I then reopened BIDS and connected directly to the cube in online mode (I could also have imported the project back into BIDS), went to the Dimension Usage tab, and deleted the relationship between Ship Date and the first linked measure group and the relationship between Order Date and the second linked measure group, leaving the relationships like this:

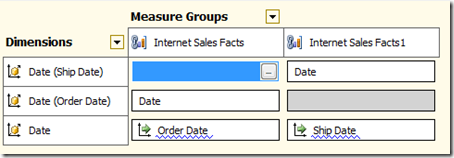
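The manual XMLA edit described above (appending a 1 to the ID and name of the measure group and its measure) could in principle be scripted rather than done by hand. The following is only a minimal sketch in Python, working on a heavily simplified, hypothetical Create script: a real script generated by Management Studio contains many more elements (bindings, dimensions, partitions and so on), which this sketch simply leaves untouched, and the `DatabaseID` value and the `clone_with_suffix` helper are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by Analysis Services XMLA/ASSL scripts.
NS = "http://schemas.microsoft.com/analysisservices/2003/engine"

# Hypothetical, heavily simplified Create script for the linked measure group.
# The DatabaseID is an assumption; a real script has many more elements.
SAMPLE = f"""<Create xmlns="{NS}">
  <ParentObject>
    <DatabaseID>Adventure Works DW</DatabaseID>
    <CubeID>EventsInProgress2</CubeID>
  </ParentObject>
  <ObjectDefinition>
    <MeasureGroup>
      <ID>Internet Sales Facts</ID>
      <Name>Internet Sales Facts</Name>
      <Measures>
        <Measure>
          <ID>Line Item Count</ID>
          <Name>Line Item Count</Name>
        </Measure>
      </Measures>
    </MeasureGroup>
  </ObjectDefinition>
</Create>"""


def clone_with_suffix(xmla: str, suffix: str = "1") -> str:
    """Append `suffix` to the ID and Name of the measure group and its measures."""
    ET.register_namespace("", NS)  # keep the default namespace on output
    root = ET.fromstring(xmla)

    def q(tag: str) -> str:
        return f"{{{NS}}}{tag}"

    group = root.find(f"{q('ObjectDefinition')}/{q('MeasureGroup')}")
    if group is None:
        raise ValueError("no MeasureGroup definition found in the script")

    # Only the direct ID/Name children of the measure group and of each
    # measure are renamed; every other element is left as it was.
    targets = [group] + group.findall(f"{q('Measures')}/{q('Measure')}")
    for obj in targets:
        for tag in ("ID", "Name"):
            node = obj.find(q(tag))
            if node is not None and node.text:
                node.text += suffix
    return ET.tostring(root, encoding="unicode")


cloned = clone_with_suffix(SAMPLE)
print(cloned)
```

The resulting script would still need to be executed against the server, for example from an XMLA query window in Management Studio, and checked carefully before use on a real cube.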

I then added another Date dimension and set up referenced relationships (which had to be non-materialised) with each measure group via the Date attributes of the Ship Date and Order Date dimensions:

With this done we have achieved our goal: we have the same fact table appearing twice in the same cube as two different measure groups with different dimensionality, but we are only processing the data once. The last step to solve the ‘events in progress’ problem is to add what is essentially the same MDX as last time to the cube:

CREATE MEMBER CURRENTCUBE.MEASURES.OrderedToDate AS

SUM(NULL: [Date].[Calendar].CURRENTMEMBER,

([Measures].[Line Item Count]));

CREATE MEMBER CURRENTCUBE.MEASURES.ShippedToPrevDate AS

SUM(NULL: [Date].[Calendar].CURRENTMEMBER.PREVMEMBER,

([Measures].[Line Item Count1]));

CREATE MEMBER CURRENTCUBE.MEASURES.OrderedNotShipped AS

MEASURES.OrderedToDate - MEASURES.ShippedToPrevDate;
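To make the arithmetic behind these three calculated measures concrete, here is a small sketch in Python rather than MDX. It models the same logic on a handful of made-up order lines: a running count of orders up to the current date, minus a running count of shipments up to the previous date. The sample dates and the `ordered_not_shipped` function name are purely illustrative and do not come from the Adventure Works data.

```python
from datetime import date
from itertools import accumulate

# Hypothetical sample data: one (order_date, ship_date) pair per order line.
orders = [
    (date(2024, 1, 1), date(2024, 1, 3)),
    (date(2024, 1, 1), date(2024, 1, 2)),
    (date(2024, 1, 2), date(2024, 1, 4)),
    (date(2024, 1, 3), date(2024, 1, 4)),
]
# The shared Date dimension, in calendar order.
calendar = [date(2024, 1, d) for d in range(1, 5)]


def ordered_not_shipped(orders, calendar):
    """Count, for each date, the orders placed on or before it and not shipped before it."""
    # Equivalent of slicing each measure group by its own date role.
    ordered_per_day = [sum(1 for o, _ in orders if o == d) for d in calendar]
    shipped_per_day = [sum(1 for _, s in orders if s == d) for d in calendar]

    # OrderedToDate: running total up to and including the current date.
    ordered_to_date = list(accumulate(ordered_per_day))
    # ShippedToPrevDate: running total up to the previous date (0 on the first date).
    shipped_to_prev_date = [0] + list(accumulate(shipped_per_day))[:-1]

    return {
        d: o - s
        for d, o, s in zip(calendar, ordered_to_date, shipped_to_prev_date)
    }


result = ordered_not_shipped(orders, calendar)
for day, in_progress in result.items():
    print(day, in_progress)
```

Note that an order still counts as in progress on its ship date, because only shipments up to the previous date are subtracted.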

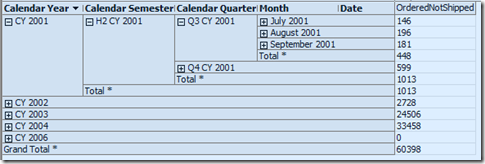

And we get the results we want out of the cube:

Now I haven’t tested this with anything other than the Adventure Works data, and there are some areas where I’d like to do more testing:

- Non-materialised reference relationships don’t always perform that well. Materialised reference relationships aren’t allowed with linked measure groups though, so using a many-to-many relationship to join the Date and Order Date/Ship Date dimensions might be an alternative.

- There are various ways of calculating a total-to-date in MDX and I’ve not spent any time working out if the version I’ve shown above is the most efficient.

- There is going to be an overhead from querying a linked measure group rather than a regular measure group, and also probably an overhead from trying to query the same source measure group twice rather than two different measure groups, but I don’t know how significant it is.

If anyone out there does decide to try this at scale with their own data, please leave a comment and tell me about your experiences.


Chris has been working with Microsoft BI tools since he started using beta 3 of OLAP Services back in the late 90s. Since then he has worked with Analysis Services in a number of roles (including three years spent with Microsoft Consulting Services) and he is now an independent consultant specialising in complex MDX, Analysis Services cube design and Analysis Services query performance problems. His company website can be found at http://www.crossjoin.co.uk and his blog can be found at http://cwebbbi.wordpress.com.